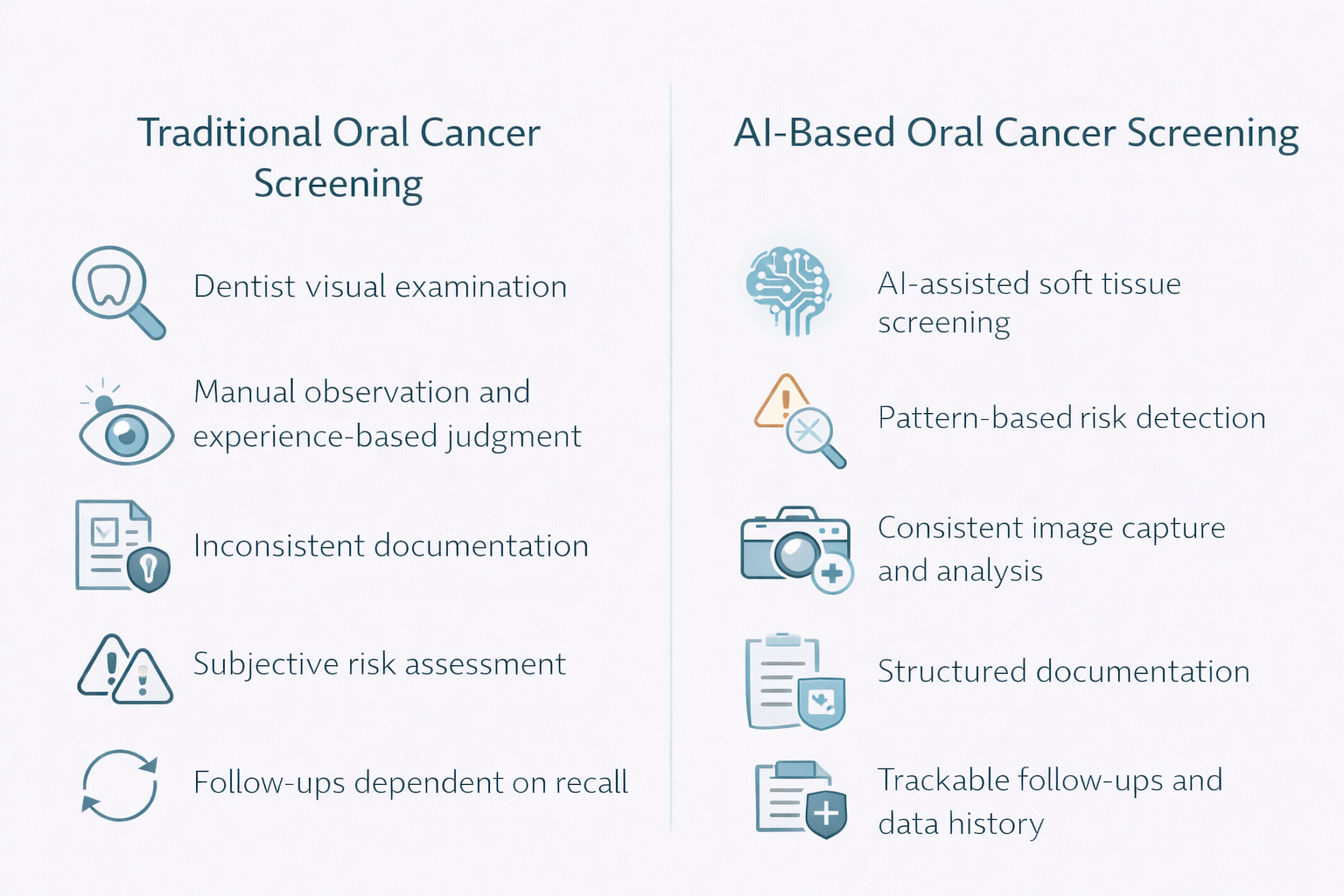

Traditional screening relies on visual and tactile examination, history-taking, and clinical judgment about what looks suspicious enough to biopsy or refer. That approach can work well in ideal conditions. The hidden problem is that clinic reality is rarely ideal: time pressure, inconsistent documentation, varying experience levels, and patients who do not return on time.

This matters because oral cancer is not a rare edge case. Globally, cancers of the lip and oral cavity were estimated at about 389,846 new cases and 188,438 deaths in 2022. When the disease burden is this large, “good when done perfectly” is not the same as “reliable across hundreds of routine days.”

The biggest operational weakness in conventional exams is variability. Two competent clinicians can look at the same lesion and differ on urgency, documentation quality, and follow-up discipline. That variability shows up in measured accuracy too. A 2022 meta-analysis of conventional oral examination reported summary estimates around 71% sensitivity and 85% specificity for dysplastic and malignant lesions.
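To make those percentages concrete, here is a minimal arithmetic sketch in Python. The sensitivity and specificity come from the meta-analysis figures above; the cohort size of 10,000 and the 1% prevalence are illustrative assumptions only, not numbers from any study.

```python
def expected_outcomes(n_patients, prevalence, sensitivity, specificity):
    """Expected screening counts for a hypothetical cohort."""
    diseased = n_patients * prevalence
    healthy = n_patients - diseased
    true_pos = diseased * sensitivity    # lesions correctly flagged
    false_neg = diseased - true_pos      # lesions missed
    true_neg = healthy * specificity     # healthy patients correctly cleared
    false_pos = healthy - true_neg       # healthy patients flagged anyway
    return {
        "true_positives": round(true_pos, 1),
        "false_negatives": round(false_neg, 1),
        "true_negatives": round(true_neg, 1),
        "false_positives": round(false_pos, 1),
        # Share of flagged patients who actually have disease
        "positive_predictive_value": true_pos / (true_pos + false_pos),
    }

# Assumed cohort: 10,000 patients, 1% prevalence (hypothetical values)
result = expected_outcomes(10_000, 0.01, 0.71, 0.85)
for name, value in result.items():
    print(f"{name}: {value}")
```

Under these assumed inputs, roughly 29 of 100 true lesions would be missed and about 1,485 healthy patients would be flagged. Change the prevalence to match your own patient population and the balance shifts considerably.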

The consequence is predictable: some high-risk lesions are missed early, and some low-risk findings create unnecessary anxiety, referrals, or repeat visits. For a clinic owner, this is not just a clinical issue. It is a workflow issue, a liability issue, and a trust issue.

In many practices, the failure point is not the moment of examination. It is the chain after it: documenting what was seen, explaining risk clearly, ensuring a referral actually happens, and tracking whether the patient returned. Traditional screening produces a finding, but often not a system for continuity.

That gap is costly in time and reputation. If a patient is told “we should watch this” without structured recall, you create silent risk. If a suspicious lesion is found but the referral pathway is informal, you create leakage. Clinics often do not notice these leaks until a case comes back late and escalated.
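One way to picture "structured recall" is as a small log that can answer a single question: which findings are past their review date with no recorded follow-up? The sketch below is a hypothetical illustration, not how any particular practice-management product works. The `Finding` fields, the patient IDs, and the review intervals are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Finding:
    patient_id: str
    description: str
    found_on: date
    action: str                      # "watch" or "refer" (illustrative labels)
    review_after_days: int           # assumed interval, set by the clinician
    completed_on: Optional[date] = None

    @property
    def due_on(self) -> date:
        return self.found_on + timedelta(days=self.review_after_days)


def overdue(findings: List[Finding], today: date) -> List[Finding]:
    """Findings whose review date has passed with no recorded follow-up."""
    return [f for f in findings if f.completed_on is None and f.due_on < today]


# Hypothetical log entries
log = [
    Finding("P-001", "white patch, left buccal mucosa", date(2025, 1, 10), "watch", 14),
    Finding("P-002", "non-healing ulcer, lateral tongue", date(2025, 2, 20), "refer", 14),
    Finding("P-003", "red lesion, floor of mouth", date(2025, 1, 5), "refer", 7,
            completed_on=date(2025, 1, 9)),
]

late = overdue(log, today=date(2025, 3, 1))
for f in late:
    print(f.patient_id, f.action, "was due", f.due_on)
```

Here only the "watch" case for P-001 surfaces as overdue, which is exactly the kind of silent risk an informal process tends to lose.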

Artificial intelligence in oral cancer detection is best understood as standardization and triage, not replacement. In AI oral cancer and oral lesion detection workflows, the model typically evaluates images or videos of oral mucosa to flag patterns consistent with oral potentially malignant disorders and high-risk lesions. In practical terms, AI can add three things that traditional screening often lacks: consistency, documentation, and auditability.

This is why AI vs traditional oral cancer screening is not about which is “smarter.” It is about which workflow is more repeatable under real constraints. A system that captures images consistently, applies the same decision rules every time, and stores outputs can reduce variation between clinicians and across days.

The right way to think about AI accuracy in oral cancer screening is in trade-offs. Dental AI systems can be tuned to reduce missed cases (higher sensitivity) but may generate more false positives that require clinician review. Evidence across studies suggests AI can reach performance comparable to experienced humans in certain settings, but results depend heavily on data quality, population, imaging method, and validation design.
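The tuning trade-off can be sketched with a toy example. The scores and labels below are invented for illustration and do not come from any real model or dataset; the only point is to show how lowering the flagging threshold raises sensitivity while sending more cases to clinician review.

```python
def evaluate(scores, labels, threshold):
    """Return (sensitivity, specificity, number flagged) at a given threshold."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y == 1 and s >= threshold)
    fn = sum(1 for s, y in pairs if y == 1 and s < threshold)
    tn = sum(1 for s, y in pairs if y == 0 and s < threshold)
    fp = sum(1 for s, y in pairs if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp), tp + fp


# Hypothetical model risk scores; label 1 = biopsy-confirmed high-risk lesion
scores = [0.95, 0.80, 0.55, 0.35, 0.70, 0.45, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

strict = evaluate(scores, labels, threshold=0.5)
lenient = evaluate(scores, labels, threshold=0.3)

for name, (sens, spec, flagged) in [("threshold 0.5", strict), ("threshold 0.3", lenient)]:
    print(f"{name}: sensitivity={sens:.2f}, specificity={spec:.2f}, flagged={flagged}")
```

In this toy data, dropping the threshold from 0.5 to 0.3 catches the one lesion that was missed, but the review queue grows from 4 flagged cases to 7.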

The consequence for a practice is operational: AI can increase the number of “needs review” findings. That is not automatically bad, but it must be planned for. If your team is not ready to manage the queue, explain results, and route appropriately, you trade one failure mode for another.

Traditional screening looks inexpensive because it is embedded in the exam. But cost is not only the clinician’s time. Cost is also the downstream impact of inconsistency: repeat visits, unresolved concerns, missed follow-ups, and late-stage discovery.

Where oral cancer burden is high, these costs compound. India, for example, is often cited as carrying a very large share of global oral cancer cases, with estimates around 77,000 incident cases and 52,000 deaths annually. In high-burden contexts, reducing variation and improving follow-through is not a “nice to have.” It is an operational risk-control decision.

AI-based oral cancer screening works best when it is embedded into a defined pathway.

The hidden problem is that many clinics attempt to add AI as a gadget, not as a workflow. If the output is not integrated into recall systems, referral notes, and patient communication, the clinic does not gain reliability. It gains noise.

AI can support earlier identification of suspicious lesions and may be able to highlight early signs of oral cancer. But biopsy and histopathology remain the confirmatory standard for diagnosis. AI is upstream. It improves the odds that the right patients are escalated sooner, with better documentation and less dependence on memory.

The consequence is also medico-legal: AI outputs should be treated as decision support, not diagnosis. Clinics should establish clear documentation language and escalation rules so the technology strengthens governance rather than creating ambiguity.

Traditional exams are not obsolete. They are essential, and they will remain the clinical anchor. The gap is that traditional screening quality is uneven across time, staff, and patient compliance. AI can narrow that gap by making screening more consistent, more documented, and easier to operationalize across a busy practice, as long as it is integrated into a follow-through pathway rather than treated as a standalone tool.

Forward-looking clinics increasingly address these operational gaps with an “intelligence layer” that connects screening to execution. Systems like scanO Engage are one example: AI soft tissue screening integrated into a dashboard for practice visibility, supported by workflow tools such as automated scheduling, digital prescriptions, smart patient calling, and disease-wise insights, so findings translate into tracked actions instead of one-time observations.

Frequently asked questions:

1. What sets AI apart from traditional oral cancer screening in day-to-day practice?

Clinicians rely on their own observation and experience in traditional screening. On the other hand, screening with AI brings consistency by spotting risk patterns for every patient during each visit.

2. Does using AI in oral cancer screening take over the dentist’s role?

Not at all. AI in oral cancer screening supports the process by spotting early risk signs, but the dentist keeps full responsibility for diagnosing and making clinical decisions.

3. Is AI better than traditional methods for finding early-stage oral cancer?

AI helps find early risks by reducing differences in human judgment. Its main advantage is creating consistent and recorded early screenings instead of depending on memory or personal opinion.

4. How does Tissue AI contribute to comparing AI and traditional oral cancer screenings?

Tissue AI studies patterns in soft tissue and points out small changes that regular exams might not catch. This adds reliable, data-driven insights to the comparison between AI and traditional methods for oral cancer screening.

5. When does AI, compared with traditional oral cancer screening, make a difference for a clinic?

A clinic sees better results when AI screening connects to structured follow-up processes, proper record-keeping, and monitoring of patients. Using AI just as a standalone gadget or visual aid does not lead to the same improvements.

An AI-powered co-author focused on generating data-backed insights and linguistic clarity.

Dr. Vidhi Bhanushali is the Co-Founder and Chief Dental Surgeon at scanO. A recipient of the Pierre Fauchard International Merit Award, she is a holistic dentist who believes that everyone should have access to oral healthcare, irrespective of class and geography. She strongly believes that tele-dentistry is the way to achieve that. Dr. Vidhi has also spoken at various dental colleges, addressing the dental fraternity about dental services and innovations. She is a keen researcher and has published various papers on recent advances in dentistry.


© 2025 Trismus Healthcare Technologies Pvt Ltd