Oral cancer is often discussed as a clinical problem. In practice, it is also an operational problem.

Most poor outcomes are not driven by a lack of treatment options. They are driven by timing. When detection happens late, the clinic is forced into complex referrals, longer treatment pathways, higher dropout risk, and more difficult conversations with patients and families. When detection happens early, the pathway is simpler and faster, and the disease is more treatable.

This is the context in which artificial intelligence (AI) in oral cancer screening matters. Not as a replacement for diagnosis, and not as a marketing claim, but as a system-level tool that can reduce missed signals, standardize screening quality, and shorten the time between “something looks off” and “this needs urgent escalation.”

Global burden data explains why this conversation keeps returning. Cancers of the lip and oral cavity were estimated at roughly 377,000 new cases and 177,000 deaths in 2020. In India, lip and oral cavity cancer is among the leading contributors to cancer mortality in national fact sheets, reinforcing that this is not a niche concern in daily practice operations.

Dental AI does not eliminate risk factors, and it does not remove the need for biopsy or specialist evaluation. What it can do is improve the reliability of frontline screening, especially in high-volume environments where attention is fragmented and variation is normal.

A common misconception is that late oral cancer detection happens because clinicians do not know what to look for.

In reality, most dentists do know the red flags. The failure is usually upstream and procedural: inconsistent soft-tissue exams, uneven documentation, unclear thresholds for referral, and time pressure that turns screening into a quick visual glance rather than a repeatable process.

This matters because the survival gradient is steep. In US population statistics, localized oral cavity and pharynx cancers show much higher 5-year relative survival than distant disease (for example, localized near 88% vs distant near 37% in SEER stage groupings). National summaries also emphasize that diagnosing at an early, localized stage materially increases 5-year survival.

Operationally, this translates into a simple reality: the clinic that detects earlier is managing a different disease than the clinic that detects later.

Another misconception is that screening is inherently low effort. Many practices treat oral cancer screening as something that happens automatically during routine exams.

But consistency is not automatic. In busy clinics, exams are often interrupted, delegated, or shortened. Even where intent is strong, the exam can become non-standard across clinicians, chairs, and shifts. That creates two risks:

The economic cost is not just medical. It is also operational: more complex case pathways, more patient anxiety, more referral coordination, and higher reputational exposure when patients believe “this should have been caught earlier.”

The research literature on diagnostic delay supports the direction of this risk. Reviews report associations between longer diagnostic delay and a higher probability of advanced-stage presentation, while acknowledging limitations and heterogeneity in the evidence.

Many people frame AI as “automation of diagnosis.” That framing creates unrealistic expectations and unnecessary resistance.

In most real-world dental settings, the more useful framing is simpler: AI is a standardization layer for soft-tissue screening.

It can help by:

The main operational benefit is reduced variability. Even strong clinicians vary under time pressure. AI is valuable when it narrows that variability and reduces the number of “missed because it was busy” moments.

Another common misconception is that AI is a single capability.

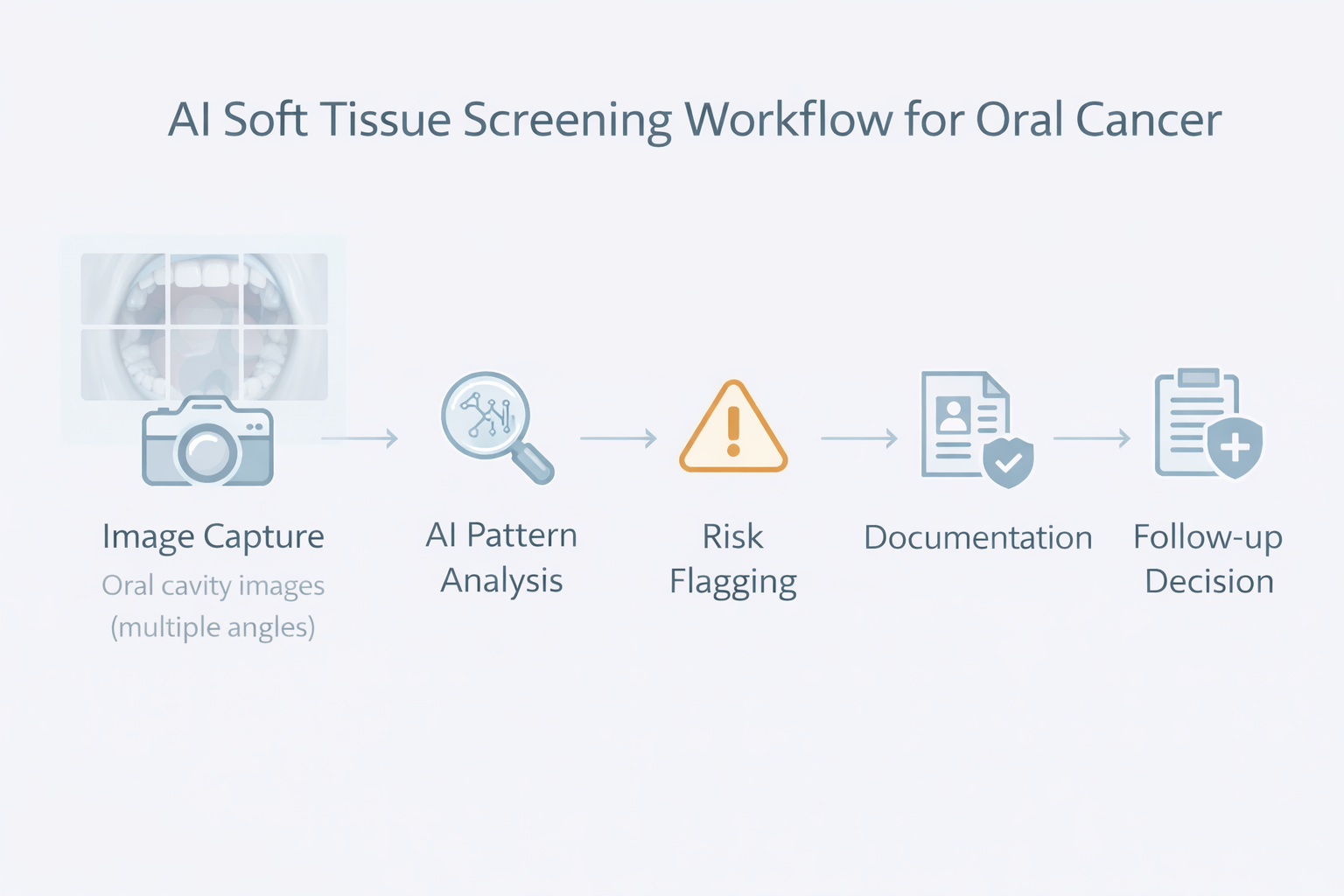

In practice, AI oral lesion detection typically involves a pipeline:

Weakness in any step can reduce performance. That is why clinic leaders should evaluate AI systems less like “a feature” and more like “a workflow instrument.” If it cannot be used consistently chairside, its theoretical accuracy is irrelevant.

The question most owners ask is blunt: does it work?

The best answer is also blunt: AI performance can be strong in controlled studies, but real-world reliability depends on data quality, population match, and workflow discipline.

Systematic reviews and meta-analyses report encouraging diagnostic performance. For example, a Frontiers review on AI detection in oral cancer reports pooled sensitivity of around 0.87 and pooled specificity of around 0.81 across the included studies. A separate systematic review of deep learning in oral cancer notes that many studies report accuracy, sensitivity, and specificity exceeding 80%, while also highlighting substantial heterogeneity and limitations in study design and reporting.

This is the key business interpretation:

So when you hear “high accuracy,” your next question should be: “In what setting, with what images, and with what patient mix?”
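The patient-mix question matters because the same sensitivity and specificity produce very different flag volumes and positive predictive values (PPV, the share of flags that turn out to be true lesions) depending on how common disease is in the screened population. The sketch below is plain arithmetic, assuming the pooled figures quoted above (0.87 and 0.81) and two purely hypothetical prevalences; the function name `screening_outcomes` is illustrative and not part of any product.

```python
def screening_outcomes(sensitivity: float, specificity: float,
                       prevalence: float, n_screened: int = 1000) -> dict:
    """Expected counts for a screen with fixed sensitivity and specificity.

    All inputs are illustrative assumptions, not clinic data.
    """
    diseased = n_screened * prevalence
    healthy = n_screened - diseased
    tp = sensitivity * diseased           # lesions correctly flagged
    fn = diseased - tp                    # lesions missed
    fp = (1 - specificity) * healthy      # healthy patients flagged anyway
    flagged = tp + fp
    ppv = tp / flagged if flagged else 0.0
    return {"flagged": flagged, "true_pos": tp, "false_pos": fp,
            "missed": fn, "ppv": ppv}


# Same pooled sensitivity/specificity, two hypothetical patient mixes.
for prevalence in (0.005, 0.05):
    r = screening_outcomes(0.87, 0.81, prevalence)
    print(f"prevalence {prevalence:.1%}: flagged {r['flagged']:.0f}, "
          f"true positives {r['true_pos']:.1f}, PPV {r['ppv']:.1%}")
```

Under these assumptions, PPV rises from roughly 2% at 0.5% prevalence to roughly 19% at 5% prevalence, even though the model itself has not changed. That is one concrete reason a headline accuracy figure cannot be carried from a study population to your chair without checking the patient mix.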

Comparisons are often framed as “AI vs clinician,” which is emotionally charged and practically unhelpful.

A better comparison is traditional screening system vs AI-assisted screening system.

Traditional screening in many clinics is:

AI-assisted screening can be designed as:

If a clinic compares “a perfect clinician on their best day” to AI, AI will look unnecessary. If it compares “real clinic conditions across 30 days, multiple clinicians, and interruptions” to AI-assisted standardization, adopting AI becomes the more rational choice.

This is why leadership should measure outcomes like:

Those are operational metrics, and they determine whether screening systems actually protect patients.
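As one hypothetical example of such a metric, the variability described earlier can be measured directly: the share of each clinician's exams that include a documented soft-tissue screen over a 30-day window, and the spread between the highest and lowest rates. The clinician labels, counts, and function names below are invented for illustration.

```python
# Hypothetical 30-day log: clinician -> (exams with a documented
# soft-tissue screen, total exams). All counts are invented.
EXAM_LOG: dict[str, tuple[int, int]] = {
    "Clinician A": (182, 190),
    "Clinician B": (120, 175),
    "Clinician C": (95, 160),
}


def screening_rates(log: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Share of each clinician's exams that included a documented screen.

    Clinicians with zero exams are skipped to avoid dividing by zero.
    """
    return {name: screened / total
            for name, (screened, total) in log.items() if total}


def rate_spread(rates: dict[str, float]) -> float:
    """Gap between the most and least consistent clinician."""
    return max(rates.values()) - min(rates.values())


rates = screening_rates(EXAM_LOG)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%} of exams screened")
print(f"spread between clinicians: {rate_spread(rates):.0%}")
```

A gap like the 36-point spread in this invented log is the kind of "missed because it was busy" variability that a standardized, AI-assisted workflow is meant to narrow; tracking the same number before and after adoption gives a direct check on whether it does.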

Early signs are often subtle: small mucosal changes, texture irregularities, persistent ulcers, red or white patches, and lesions that look “borderline” rather than dramatic.

AI can help by forcing attention onto small abnormalities and reducing the human tendency to normalize what is common. In high-risk populations, “common” can be exactly what you should not normalize.

But it is important to be precise: AI does not confirm cancer. It can flag patterns consistent with lesions that deserve escalation. Confirmation still depends on clinical judgment, differential diagnosis, and biopsy pathways.

Clinically, the risk is not that AI makes decisions. The risk is that clinics treat AI flags as a diagnosis, or ignore flags without a documented reasoning trail. The right posture is: AI raises a signal, the clinician owns the decision.

Screening creates value only when it changes outcomes. Outcomes change only when follow-up happens.

Many clinics already have the weak link: patients do not return. They postpone. They minimize symptoms. They get referred and disappear into the healthcare maze.

So the operational question is not “can AI screen?” It is:

This is where AI oral cancer screening benefits become tangible:

Without the follow-up loop, AI becomes a gadget. With the loop, it becomes a risk management system.
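A follow-up loop can be made concrete as a small daily audit over flagged cases: every AI flag needs a documented clinician decision and rationale (the clinician owns the decision), and every flag needs a follow-up event within an agreed window. The sketch below assumes a hypothetical `FlaggedCase` record, a hypothetical 14-day window, and made-up patient IDs; it is not the data model of any specific platform.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class FlaggedCase:
    """Hypothetical record for one AI soft-tissue flag (field names are illustrative)."""
    patient_id: str
    flagged_on: date
    clinician_decision: Optional[str] = None   # e.g. "escalate" or "monitor"
    rationale: Optional[str] = None            # documented reasoning trail
    followed_up_on: Optional[date] = None      # review visit, referral, or biopsy


def open_loops(cases: list[FlaggedCase], today: date,
               max_days: int = 14) -> list[tuple[str, str]]:
    """Return (patient_id, reason) for every flag whose loop is not closed."""
    problems = []
    for case in cases:
        if case.clinician_decision is None or not case.rationale:
            # A flag with no documented decision is an ignored signal.
            problems.append((case.patient_id, "no documented clinician decision"))
        elif (case.followed_up_on is None
              and today - case.flagged_on > timedelta(days=max_days)):
            # Decision recorded, but the patient never came back.
            problems.append((case.patient_id, f"no follow-up after {max_days} days"))
    return problems


cases = [
    FlaggedCase("P001", date(2025, 1, 6), "escalate", "persistent ulcer, 3 weeks",
                followed_up_on=date(2025, 1, 10)),
    FlaggedCase("P002", date(2025, 1, 6), "escalate", "white patch, lateral tongue"),
    FlaggedCase("P003", date(2025, 1, 20)),
]
for patient_id, reason in open_loops(cases, today=date(2025, 2, 1)):
    print(patient_id, reason)
```

Run daily, a check like this turns "patients disappear into the healthcare maze" into a short, named work list. In this example it reports P002 (escalated, but no follow-up 26 days after the flag) and P003 (a flag with no documented decision), while P001 is correctly treated as closed.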

Clinic owners sometimes assume higher sensitivity is always good.

It is not, if specificity collapses.

Over-referral creates:

This is why AI should be evaluated in terms of net clinical utility, not just headline sensitivity. The best systems reduce misses without flooding your pathway with noise.

That balance is exactly why published pooled estimates of sensitivity and specificity should be read together, not selectively.
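One standard way to put a number on net clinical utility is net benefit from decision curve analysis: true positives per patient minus false positives per patient, with each false positive weighted by the odds of the risk threshold at which a clinician would escalate. The sketch below compares the pooled operating point quoted earlier with a hypothetical sensitivity-heavy setting (0.95 sensitivity, 0.50 specificity); the prevalence and threshold values are illustrative assumptions, not clinical recommendations.

```python
def net_benefit(sensitivity: float, specificity: float,
                prevalence: float, threshold: float) -> float:
    """Net benefit per patient screened, as in decision curve analysis.

    False positives are weighted by threshold / (1 - threshold). At a
    threshold of 0.05 that weight is 1/19, i.e. the clinic accepts 19
    unnecessary escalations for each true lesion found.
    """
    tp_rate = sensitivity * prevalence               # true positives per patient
    fp_rate = (1 - specificity) * (1 - prevalence)   # false positives per patient
    return tp_rate - fp_rate * threshold / (1 - threshold)


PREVALENCE = 0.02   # hypothetical share of screened patients needing escalation
THRESHOLD = 0.05    # hypothetical escalation threshold chosen by the clinic

operating_points = {
    "balanced (pooled 0.87 / 0.81)": (0.87, 0.81),
    "sensitivity-heavy (hypothetical 0.95 / 0.50)": (0.95, 0.50),
}
for name, (sens, spec) in operating_points.items():
    flags_per_1000 = 1000 * (sens * PREVALENCE + (1 - spec) * (1 - PREVALENCE))
    nb = net_benefit(sens, spec, PREVALENCE, THRESHOLD)
    print(f"{name}: {flags_per_1000:.0f} flags per 1,000, net benefit {nb:+.4f}")
```

In this made-up scenario the sensitivity-heavy setting finds about 1.6 more true lesions per 1,000 patients but raises flags from about 204 to about 509, and its net benefit drops below zero, meaning that at this threshold it performs worse than escalating no one. Different prevalence or threshold assumptions will change the numbers, but the point carries over: sensitivity and specificity have to be judged together.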

Many clinics ask the wrong trust question.

Trust is not binary. The real question is comparative: are you more comfortable trusting the variability of a manual process under time pressure, or a standardized process that adds a second set of eyes and forces documentation discipline?

AI is not perfect. Neither is real-world screening.

From a leadership perspective, the rational position is:

That is how high-performing practices adopt technology without becoming dependent on it.

Some practices are closing these gaps by treating screening as part of an integrated operational system rather than a standalone exam. Platforms such as scanO Engage represent this approach by combining integrated AI soft-tissue screening with practice visibility and follow-through, supported by components like an AI-powered dashboard, automated scheduling, smart patient calling, disease-wise insights, and daily workflow tooling. The point is not the feature list. The point is that screening becomes measurable, documentable, and operationally enforceable.

The core implication is simple: oral cancer outcomes are strongly shaped by process quality. If your screening process is informal, your risk is informal. If your screening process is systematic, your clinic is operating like early detection actually matters.

1. How does AI enhance oral cancer screening in everyday dental check-ups?

AI makes screening more consistent. It identifies patterns in images across different patients and appointments, which reduces reliance on memory, available time, or individual judgment during exams.

2. Does AI detect oral cancer, or does it assist clinicians in catching it?

AI helps flag tissue changes that may be suspicious. Clinicians still make the final diagnosis, which involves clinical examination, referral, and biopsy.

3. How dependable is AI when compared to traditional oral cancer screening approaches?

AI reduces the inconsistencies common in manual exams by applying a standardized approach to soft-tissue analysis. It works alongside traditional methods rather than replacing them, offering added support in busy clinics.

4. Does AI screening make dentists' work harder or more complicated?

When set up correctly, AI reduces mental load. It organizes screening and record-keeping rather than adding tasks.

5. What is Tissue AI, and how is it useful for finding oral cancer?

Tissue AI uses software to analyze a structured set of 16 intraoral images. It identifies patterns linked to oral cancer risk and helps clinicians by highlighting areas that may need further examination or follow-up.

An AI-powered co-author focused on generating data-backed insights and linguistic clarity.

Dr. Vidhi Bhanushali is the Co-Founder and Chief Dental Surgeon at scanO. A recipient of the Pierre Fauchard International Merit Award, she is a holistic dentist who believes that everyone should have access to oral healthcare, irrespective of class and geography. She strongly believes that tele-dentistry is the way to achieve that. Dr. Vidhi has also spoken at various dental colleges, addressing the dental fraternity about dental services and innovations. She is a keen researcher and has published various papers on recent advances in dentistry.


scanO is an AI ecosystem transforming oral health for patients, dentists, corporates, and insurers worldwide

© 2025 Trismus Healthcare Technologies Pvt Ltd