Most dentists can spot obvious disease. The operational problem is what happens in the grey zone: subtle mucosal change, inconsistent lighting, rushed documentation, and variable experience across clinicians and sites. Conventional oral examination is useful, but it is not uniformly reliable as a diagnostic test. A 2022 meta-analysis reported summary estimates of around 71% sensitivity and 85% specificity for detecting dysplastic or malignant lesions with conventional oral examination. That is meaningful, but at 71% sensitivity roughly three in ten such lesions would go undetected in real-world screening.

The consequence is straightforward. When early, high-risk oral lesions are missed or not escalated, detection shifts downstream into symptomatic stages, where treatment intensity and chair time increase and patient outcomes get worse.

In most practices, the hard part is not noticing that “something looks off.” The hard part is deciding whether it is low-risk irritation, a lesion that needs close review, or a finding that must be referred quickly. That triage is influenced by clinician variability, the quality of the patient history, and the simple fact that soft tissue findings are often not photographed consistently enough to compare over time.

The consequence is operational drift. Lesions get labeled as “monitor,” but monitoring is inconsistent. Referrals happen late. Documentation gaps make continuity harder, especially when patients see multiple providers or return months later with a changed lesion.

Oral cancer is not a niche problem in many South Asian settings. India is frequently cited as having a very high burden, with reviews noting it accounts for a large share of global cases.

This matters for business owners because higher prevalence changes the math of screening value. When prevalence is non-trivial, the cost of missed cases is not just clinical. It becomes reputational and legal risk, plus real downstream load on scheduling, emergency visits, and complex care coordination.
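To make "prevalence changes the math" concrete, here is a minimal sketch of how positive and negative predictive value shift with prevalence under Bayes' rule. The sensitivity and specificity are the conventional-examination summary estimates quoted earlier; the prevalence values and the function name `predictive_values` are illustrative assumptions, not figures from any study.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a screening test using Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)  # P(disease | positive screen)
    npv = true_neg / (true_neg + false_neg)  # P(no disease | negative screen)
    return ppv, npv


# 71% / 85% are the summary estimates cited in the text.
# The prevalence values below are assumptions chosen only for illustration.
for prevalence in (0.005, 0.02, 0.05):
    ppv, npv = predictive_values(0.71, 0.85, prevalence)
    print(f"prevalence {prevalence:.1%}: PPV {ppv:.1%}, NPV {npv:.2%}")
```

With the test characteristics held fixed, a positive screen carries more weight as prevalence rises, which is why the same exam has a different operational value in a high-burden setting.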

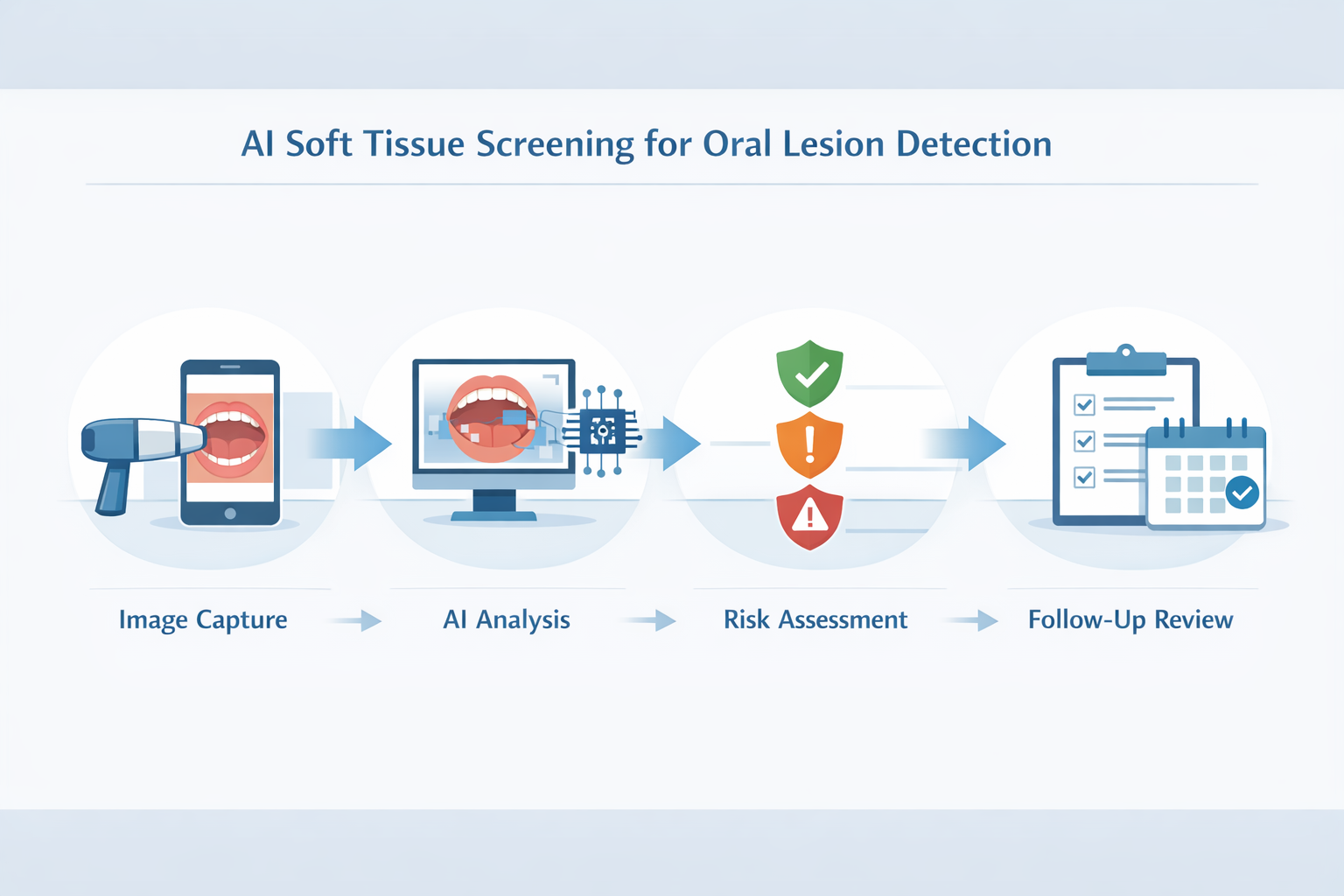

Dental AI in this context is best understood as a consistency layer, not a replacement for clinical judgment. In day-to-day practice, performance is lost to variability: lighting, angle, speed, memory, and documentation habits. AI systems trained on large sets of labeled images can do two useful things reliably:

First, they can flag suspicious patterns that deserve a second look, which is essentially decision support for triage. Second, they can standardize capture and classification workflows so that “monitor” becomes a trackable pathway rather than a vague note.
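One way to picture “monitor” as a trackable pathway is a record with an explicit review interval and a query for overdue reviews. This is a minimal sketch under assumed names: `MonitoredLesion`, its fields, and the 14-day interval are hypothetical and do not describe any particular product or clinical protocol.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class MonitoredLesion:
    """Hypothetical record for a lesion placed on a 'monitor' pathway."""
    patient_id: str
    site: str
    first_seen: date
    review_interval_days: int
    last_reviewed: Optional[date] = None

    def next_review_due(self) -> date:
        # The clock runs from the most recent review, or from first sighting.
        anchor = self.last_reviewed or self.first_seen
        return anchor + timedelta(days=self.review_interval_days)


def overdue(lesions: List[MonitoredLesion], today: date) -> List[MonitoredLesion]:
    """Return lesions whose scheduled review date has already passed."""
    return [lesion for lesion in lesions if lesion.next_review_due() < today]


# Illustrative data only; the interval is a placeholder, not a recommendation.
lesions = [
    MonitoredLesion("P-001", "left buccal mucosa", date(2025, 1, 6), 14),
    MonitoredLesion("P-002", "lateral tongue", date(2025, 1, 6), 14,
                    last_reviewed=date(2025, 1, 20)),
]
for lesion in overdue(lesions, today=date(2025, 1, 27)):
    print(f"{lesion.patient_id}: review was due {lesion.next_review_due()}")
```

The design point is that every “monitor” note carries a due date and an owner-visible overdue list, so follow-up no longer depends on someone remembering the lesion.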

Evidence reviews of AI for oral cancer and potentially malignant disorders often report high performance ranges in research settings, with deep learning results commonly spanning accuracy about 81% to 99.7%, sensitivity about 79% to 98.75%, and specificity about 82% to 100% across included studies.

The consequence is not perfect diagnosis. The consequence is fewer lesions silently falling through process cracks, because the system pushes the practice toward repeatable capture, risk tagging, and escalation cues.

High numbers can create false confidence if you do not ask what the model is optimized for. Many AI systems in medical imaging show a sensitivity-heavy profile, meaning they catch more potential positives but may produce more false alarms. A 2024 review of automated detection noted that many AI systems show higher sensitivity than specificity, and cited one study reporting around 89.5% sensitivity and 67.1% specificity.
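The trade-off is easier to see as expected counts per 1,000 screened patients. The sketch below compares the conventional-examination summary estimates (71% / 85%) with the sensitivity-heavy example (89.5% / 67.1%). The 2% prevalence is an assumption for illustration, and because the two sets of figures come from different studies and populations, the comparison is indicative only.

```python
def expected_counts(sensitivity, specificity, prevalence, n_patients=1000):
    """Expected missed cases and false alarms in a screened population."""
    diseased = n_patients * prevalence
    healthy = n_patients - diseased
    return {
        "missed_cases": diseased * (1 - sensitivity),  # false negatives
        "false_alarms": healthy * (1 - specificity),   # false positives
    }


ASSUMED_PREVALENCE = 0.02  # illustrative assumption, not a reported figure

profiles = {
    "conventional exam (71% / 85%)": (0.71, 0.85),
    "sensitivity-heavy AI example (89.5% / 67.1%)": (0.895, 0.671),
}

for name, (sens, spec) in profiles.items():
    counts = expected_counts(sens, spec, ASSUMED_PREVALENCE)
    print(f"{name}: {counts['missed_cases']:.1f} missed, "
          f"{counts['false_alarms']:.1f} false alarms per 1,000")
```

Under these assumptions the sensitivity-heavy profile misses fewer cases but generates many more flags to review, which is the workload question a clinic has to plan for.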

For a clinic owner, the operational question is not “Is the model perfect?” It is “Does it improve my triage discipline without overwhelming my team?” False positives create extra consult time and may increase patient anxiety if communicated poorly. False negatives create the bigger risk. The correct management approach is to treat artificial intelligence in oral cancer screening as an escalation and documentation tool, with clear internal rules for re-evaluation, referral thresholds, and patient communication.
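Internal rules of this kind can be written down explicitly so they are applied the same way by every provider. The following is a minimal sketch, assuming a hypothetical AI risk score between 0 and 1. The names `TriagePolicy` and `triage` and both threshold values are placeholders for illustration, not clinical recommendations; the function returns a minimum action, and the clinician can always escalate further.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ROUTINE = "routine recall"
    RE_EVALUATE = "re-evaluate at a scheduled review"
    REFER = "refer"


@dataclass(frozen=True)
class TriagePolicy:
    # Placeholder thresholds; a real practice would set and audit its own.
    review_threshold: float = 0.30
    refer_threshold: float = 0.70


def triage(ai_risk_score: float, clinician_concern: bool,
           policy: TriagePolicy = TriagePolicy()) -> Action:
    """Return the minimum escalation step for a soft tissue finding."""
    if not 0.0 <= ai_risk_score <= 1.0:
        raise ValueError("ai_risk_score must be between 0 and 1")
    if ai_risk_score >= policy.refer_threshold:
        return Action.REFER
    # A low AI score never downgrades a clinician's concern.
    if clinician_concern or ai_risk_score >= policy.review_threshold:
        return Action.RE_EVALUATE
    return Action.ROUTINE


print(triage(0.10, clinician_concern=True).value)
print(triage(0.85, clinician_concern=False).value)
```

Because false negatives carry the bigger risk, the rule is deliberately asymmetric: either the AI flag or the clinician's concern is enough to escalate, and neither can cancel the other.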

Traditional screening is still the base layer because the clinician owns the exam, the history, and the decision to refer. But traditional workflows often lack consistency and auditability, especially when practices are busy or multi-location. Evidence syntheses show conventional oral examination can perform reasonably, but not at a level where clinics should rely on it as the only gate.

AI-based oral cancer screening can add value when it is layered on top of the exam to improve three things: repeatable capture, consistent risk prompts, and longitudinal comparison. The consequence is a tighter system in which spotting the early signs of oral cancer is less dependent on who happened to see the patient and how rushed the appointment was.

When you view the benefits of AI oral cancer screening through an operational lens, the value concentrates in predictable places: repeatable capture, consistent risk prompts, and longitudinal comparison.

These are not abstract benefits. They show up as fewer “we should have caught this earlier” moments and fewer follow-up failures caused by unclear ownership of monitoring.

Practices that treat soft tissue screening as a process, not a one-time observation, are adopting operational layers that make detection, triage, and follow-through harder to break. Systems like scanO Engage serve as an operational intelligence layer: they combine AI soft tissue screening with structured workflows such as patient visibility dashboards, disease-wise insights, follow-up scheduling, digital prescriptions, smart patient calling, and billing support, so that “monitor this lesion” becomes a managed pathway rather than a memory-dependent task.

The strategic shift is simple: high-risk oral lesions are not missed only because clinicians fail to look. They are missed because variability and weak follow-through are normal in busy practices. AI oral lesion detection is most valuable when it forces consistency into that reality, and consistency is what turns early recognition into better outcomes.

Subtle soft tissue changes, whether early-stage or ambiguous, gain the most from this approach. Lesions marked as “monitor” are easily overlooked without a solid follow-up plan, and a structured workflow helps prevent that.

An AI-powered co-author focused on generating data-backed insights and linguistic clarity.

Dr. Vidhi Bhanushali is the Co-Founder and Chief Dental Surgeon at scanO. A recipient of the Pierre Fauchard International Merit Award, she is a holistic dentist who believes that everyone should have access to oral healthcare, irrespective of class and geography. She strongly believes that tele-dentistry is the way to achieve that. Dr. Vidhi has also spoken at various dental colleges, addressing the dental fraternity about dental services and innovations. She is a keen researcher and has published various papers on recent advances in dentistry.


© 2025 Trismus Healthcare Technologies Pvt Ltd